The Intelligence Bottleneck: Scaling AI Through Reinvention

Opening Thesis

Every AI-native product eventually collides with a single constraint: your system can only be as intelligent as the feedback loops it can actually sustain.

Not compute. Not model choice. Not feature set.

The real bottleneck is the system’s ability to learn from reality.

Conceptual Contrast

Traditional Product Thinking: You ship features, collect generic analytics, and improve based on periodic feedback.

AI-Native Product Thinking: You design feedback loops first, because they are the source of your intelligence, differentiation, and compounding advantage.

One approach assumes value comes from what the product does. The other assumes value comes from how the product learns.

Deep Exploration

1. The Hidden Constraint Most Founders Miss

The temptation in AI is to over-invest in capability and under-invest in signal. You expand what the system could do without increasing what it can learn.

This creates an illusion of progress: more features, more automation, more demos. But intelligence stalls because the system lacks the raw material for improvement.

Without feedback loops, AI plateaus fast.

2. Why AI Products Decay Without Strong Loops

Every interaction between users and the system either sharpens the intelligence or introduces drift.

When feedback loops are weak, sporadic, or manually maintained:

assumptions solidify instead of evolving

the system stops adapting to real behavior

errors accumulate silently

and the product becomes rigid, even brittle

AI doesn’t simply “stay the same.” As real behavior drifts away from what the system learned, it decays.

3. The Founder’s Blind Spot: Learning Load

Every AI-native system absorbs cognitive load from the environment. But founders rarely track the learning load:

What volume of signal can the system handle?

How quickly is it processed?

How much correction is needed?

Where does the loop break under real usage?

Teams track velocity, roadmap progress, and burn rate, but not learning velocity. That’s how intelligence gets stuck.
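Learning load can be tracked with the same discipline as burn rate. The sketch below assumes a hypothetical event log in which each captured signal records when it was captured, when (if ever) it was processed, and whether a user corrected the resulting output. `SignalEvent`, `learning_load`, and the four metric names are illustrative, not a standard; "share of signals never processed" is just one possible proxy for where the loop breaks.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class SignalEvent:
    """One captured signal in a hypothetical event log (illustrative schema)."""
    captured_at: datetime
    processed_at: Optional[datetime]  # None if the signal was never processed
    corrected: bool                   # True if a user corrected the system's output


def learning_load(events: list[SignalEvent], window: timedelta) -> dict[str, float]:
    """Summarize learning load for events captured during `window`."""
    if not events:
        return {"signals_per_day": 0.0, "median_latency_hours": float("nan"),
                "correction_rate": 0.0, "unprocessed_rate": 0.0}
    processed = [e for e in events if e.processed_at is not None]
    latencies = [(e.processed_at - e.captured_at) / timedelta(hours=1) for e in processed]
    return {
        # What volume of signal arrives per day?
        "signals_per_day": len(events) / (window / timedelta(days=1)),
        # How quickly is it processed? (median hours from capture to processing)
        "median_latency_hours": median(latencies) if latencies else float("nan"),
        # How much correction is needed? (share of outputs users corrected)
        "correction_rate": sum(e.corrected for e in events) / len(events),
        # Where does the loop break? (share of signals never processed)
        "unprocessed_rate": 1 - len(processed) / len(events),
    }


t0 = datetime(2024, 1, 1, 9, 0)
events = [
    SignalEvent(t0, t0 + timedelta(hours=2), corrected=True),
    SignalEvent(t0, t0 + timedelta(hours=4), corrected=False),
    SignalEvent(t0, None, corrected=False),
    SignalEvent(t0, t0 + timedelta(hours=6), corrected=True),
]
print(learning_load(events, window=timedelta(days=2)))
# → {'signals_per_day': 2.0, 'median_latency_hours': 4.0, 'correction_rate': 0.5, 'unprocessed_rate': 0.25}
```

Reviewing these numbers each week next to velocity makes a stalled loop visible before it shows up as a stalled product.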

Framework — The Four Bottlenecks of Intelligence

To understand where your system is constrained, examine these four bottlenecks:

1. Capture Bottleneck

Can the system capture the right data at the moment the behavior happens?

2. Interpretation Bottleneck

Can it interpret the signal reliably, with low ambiguity or noise?

3. Feedback Bottleneck

Do users correct the system in ways the system can meaningfully absorb?

4. Integration Bottleneck

Does the intelligence actually modify system behavior in a controlled, measurable way?

Most early-stage AI products break in at least one of these four.

Practical Blueprint — Strengthening the Loop Today

You can improve your intelligence loops in a single day by doing the following:

1. Map the Learning Flow

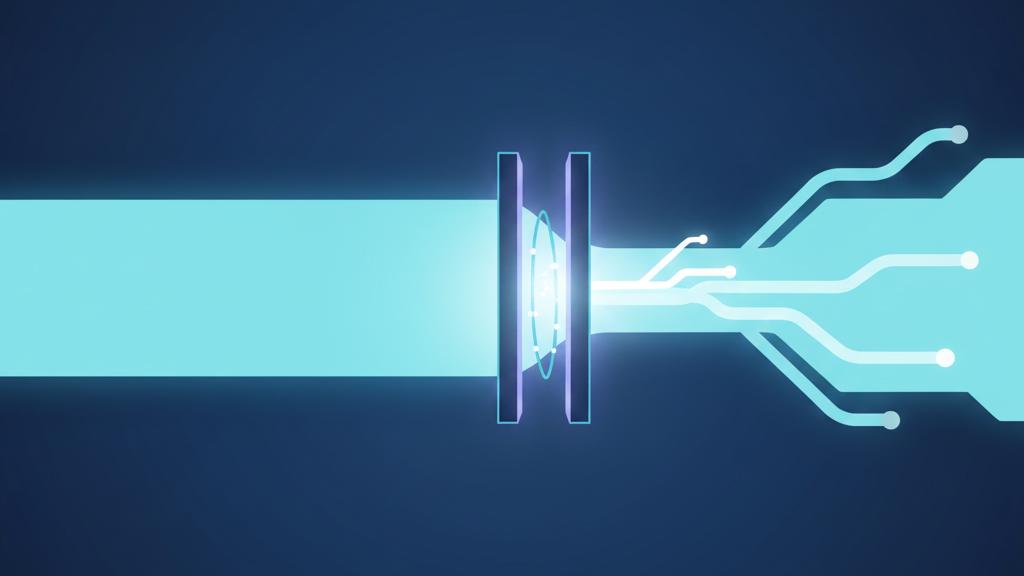

Draw a single diagram: user behavior → system capture → system output → user correction → improvement.

Identify the weakest link immediately.
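If you can count how many interactions reach each stage of that diagram over a period, the weakest link is the transition with the lowest pass-through rate. This is a minimal sketch under that assumption. `weakest_link` is a hypothetical helper, and the weekly counts are made up for illustration. Counting any explicit feedback (approvals as well as fixes) at the correction stage keeps a low correction count from being misread as a broken loop.

```python
def weakest_link(stage_counts: dict[str, int]) -> tuple[str, float]:
    """Return the stage transition with the lowest pass-through rate.

    `stage_counts` maps each stage of the learning flow, in order, to how many
    interactions reached it during some period (dicts keep insertion order).
    """
    stages = list(stage_counts.items())
    if len(stages) < 2:
        raise ValueError("need at least two stages to compare")
    worst_name, worst_rate = "", float("inf")
    for (prev_name, prev_n), (name, n) in zip(stages, stages[1:]):
        # Share of interactions that made it from one stage to the next
        rate = n / prev_n if prev_n else 0.0
        if rate < worst_rate:
            worst_name, worst_rate = f"{prev_name} -> {name}", rate
    return worst_name, worst_rate


# Illustrative counts for one week: "user correction" counts outputs that got any
# explicit feedback; "improvement" counts feedback that reached a model update.
flow = {
    "user behavior": 10_000,
    "system capture": 9_500,
    "system output": 9_400,
    "user correction": 3_000,
    "improvement": 150,
}
print(weakest_link(flow))  # → ('user correction -> improvement', 0.05)
```

In this made-up example, users give plenty of feedback, but almost none of it changes the system. That points at the Integration Bottleneck rather than at capture.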

2. Instrument the Correction Surface

Make it effortless for users to correct the system.

Examples: inline edits, toggles, thumbs up/down, draft review workflows, structured correction prompts.
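Corrections are easiest to absorb when every surface emits the same structured record. The sketch below assumes a hypothetical schema: `CorrectionEvent`, `CorrectionKind`, and the field names are illustrative choices, not an established format. It shows a thumbs-down and an inline edit ending up in one shape that downstream learning can consume.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class CorrectionKind(str, Enum):
    THUMBS_UP = "thumbs_up"
    THUMBS_DOWN = "thumbs_down"
    INLINE_EDIT = "inline_edit"
    TOGGLE = "toggle"


@dataclass
class CorrectionEvent:
    output_id: str                        # which system output the user reacted to
    kind: CorrectionKind
    original_text: Optional[str] = None   # what the system produced (for edits)
    corrected_text: Optional[str] = None  # what the user changed it to (for edits)
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        # An inline edit is only a usable learning signal if both versions are kept.
        if self.kind is CorrectionKind.INLINE_EDIT and (
            self.original_text is None or self.corrected_text is None
        ):
            raise ValueError("inline edits need original_text and corrected_text")

    def to_json(self) -> str:
        """Serialize to a flat JSON record for an event log or queue."""
        record = asdict(self)
        record["kind"] = self.kind.value
        record["created_at"] = self.created_at.isoformat()
        return json.dumps(record)


thumbs_down = CorrectionEvent(output_id="out-42", kind=CorrectionKind.THUMBS_DOWN)
edit = CorrectionEvent(output_id="out-43", kind=CorrectionKind.INLINE_EDIT,
                       original_text="Dear Sir", corrected_text="Hi Sam")
print(thumbs_down.to_json())
print(edit.to_json())
```

Validating at capture time (here, rejecting an edit without both versions) costs a line of code. Discovering months later that the logged corrections can't be learned from costs far more.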

3. Reduce Interpretation Ambiguity

Create constraints that make user behavior easier to interpret, such as a fixed set of correction reasons instead of an open text box. Think structure, not complexity.

4. Tighten the Learning Window

Shorten the time between a correction and the system’s adaptation. When learning is delayed, the system keeps repeating mistakes users have already corrected, and its intelligence drifts out of alignment.
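To tighten the learning window you first have to measure it. This minimal sketch assumes you have timestamps for when each correction was made and when each model, prompt, or rules update shipped. It treats a correction as absorbed by the first update shipped after it. That assumption is optimistic: if that update didn't actually include the correction, the real window is longer. `learning_windows` and `median_learning_window` are hypothetical helpers.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


def learning_windows(corrections: list[datetime],
                     updates: list[datetime]) -> list[Optional[timedelta]]:
    """For each correction, the time until the next update shipped (None if none has)."""
    ordered = sorted(updates)
    windows: list[Optional[timedelta]] = []
    for made_at in corrections:
        i = bisect_right(ordered, made_at)  # first update strictly after the correction
        windows.append(ordered[i] - made_at if i < len(ordered) else None)
    return windows


def median_learning_window(corrections: list[datetime],
                           updates: list[datetime]) -> Optional[timedelta]:
    """Median window over corrections that some update could have absorbed."""
    absorbed = [w for w in learning_windows(corrections, updates) if w is not None]
    return median(absorbed) if absorbed else None


day = datetime(2024, 3, 1)
corrections = [day, day + timedelta(days=1), day + timedelta(days=9)]
updates = [day + timedelta(days=7), day + timedelta(days=14)]
print(median_learning_window(corrections, updates))  # → 6 days, 0:00:00
```

Because this window is bounded by release cadence, shipping smaller, more frequent updates is one direct lever on the number.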

Founder Identity Shift

Founders who ship more features run into walls. Founders who strengthen feedback loops unlock compounding intelligence.

The identity shift is subtle but transformative:

From building capability → to building learnability. From features → to flows. From decision-maker → to designer of intelligence.

This is where AI-native momentum actually begins.

Takeaway

Your system’s intelligence is not determined by what you build into it, but by the learning loops around it.

Real advantage emerges not from what the product knows today, but from how quickly it becomes smarter tomorrow.